How to make your content visible in AI responses (LLMs, Google AI Overviews)

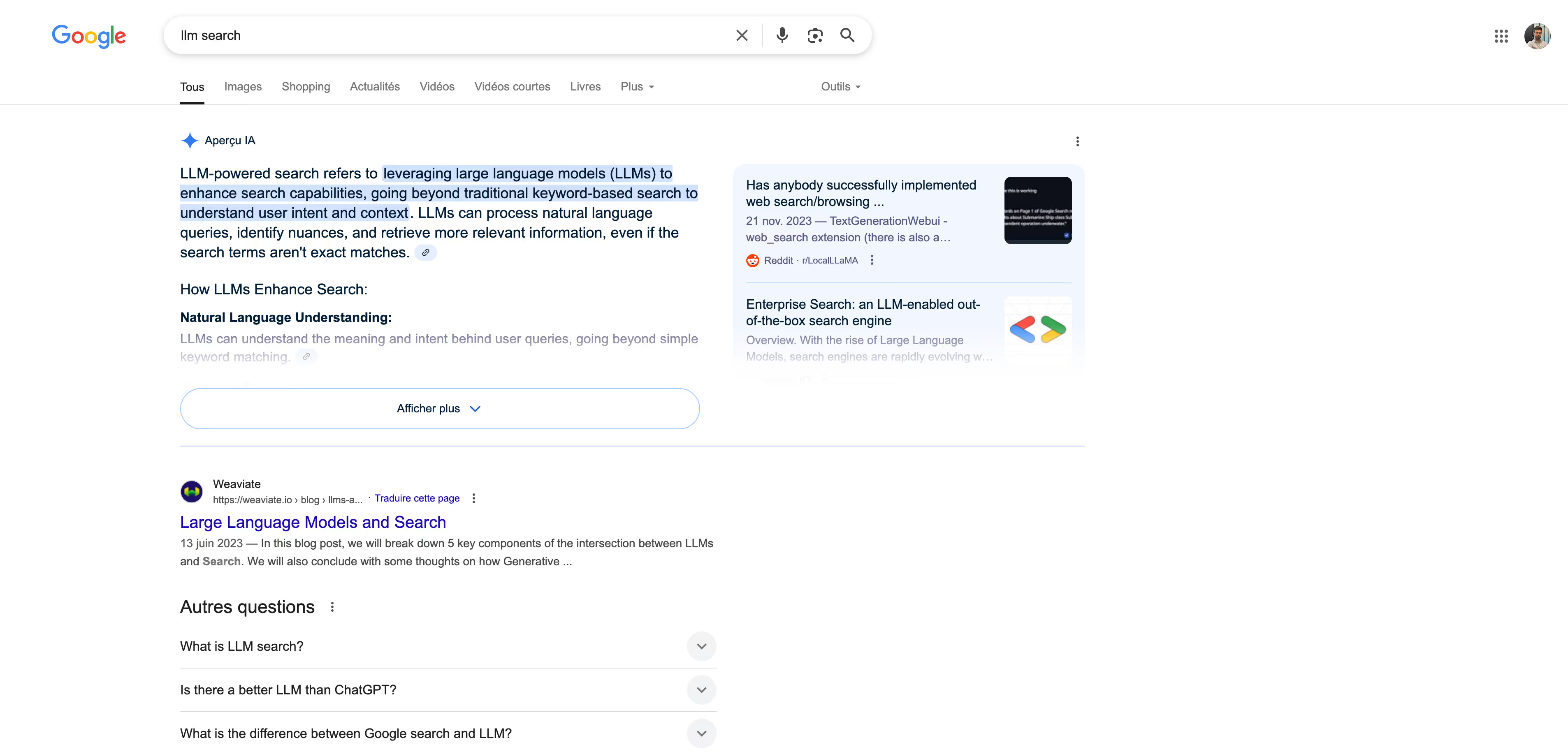

Language models like ChatGPT, Gemini, or Claude no longer just read your pages. They synthesize them, summarize them, and decide whether they are worth quoting in an answer. At the same time, Google is rolling out a new interface, AI Overviews, which displays an AI-generated answer at the top of the results, often without the user needing to click.

This shift is profoundly changing the logic of organic search (SEO). What matters now is not only your position in the SERP but whether an AI can understand, rephrase, and reuse your content.

That is the goal of GEO (Generative Engine Optimization): adapting your content so that it exists in AI environments, while respecting the fundamentals of classic SEO. Here is a clear, concrete method to get there.

Generative AI is no longer a thing of the future. In 2025, the global large language model (LLM) market is expected to reach 7.13 billion Canadian dollars, up 28.3% over one year (source: The Business Research Company).

Content can rank well on Google and still be invisible to an AI. Why? Because models like GPT-4 or Gemini process information in three successive layers: technical access, semantic structure, and cognitive usability.

In other words, to be picked up by an LLM, writing a good article is not enough. You have to produce content that is technically readable, semantically structured, and cognitively usable.

Before you even optimize content for artificial intelligence, it has to be visible to and readable by the systems that feed LLMs and AI engines like Gemini. This step is often underestimated, even though it determines everything that follows.

LLMs rely largely on data collected by traditional crawlers (Googlebot, Common Crawl, etc.) to build their understanding of the web. If your content renders poorly, loads too slowly, or is hidden behind JavaScript that crawlers cannot interpret, it will not be indexed, picked up, or cited.

This is the basic principle of GEO: optimization starts with accessibility.

Sites built with React, Vue, or Angular (when not properly configured) rely on client-side rendering (CSR): the user's browser executes the JavaScript and builds the page. However, most AI bots, including those used to train LLMs or generate AI Overviews, do not execute JavaScript, or do so only partially.

As a result, your main content may simply be missing from the index.

Concrete solutions:

To test whether your pages are readable, run `curl https://www.votresite.com/page` and check whether the main text appears in the response.

✅ If your main content does not show up without JavaScript, generative AI will not be able to use it.
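The curl check can also be scripted. Below is a minimal Python sketch using only the standard library. It assumes that one sentence you expect in the main content is a good enough probe. It fetches the raw HTML without executing any JavaScript (as curl does), discards `<script>` and `<style>` contents, and looks for that sentence in what remains. The function names are hypothetical.

```python
import urllib.request
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects text nodes, skipping the contents of <script> and <style>."""

    SKIP = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def visible_text(html: str) -> str:
    """Text present in the raw HTML, i.e. roughly what a non-JS crawler sees."""
    parser = _TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def is_readable_without_js(html: str, expected_phrase: str) -> bool:
    """True if the expected phrase appears in the server-rendered text."""
    text = " ".join(visible_text(html).split()).lower()
    return " ".join(expected_phrase.split()).lower() in text


def fetch_raw_html(url: str) -> str:
    """Download the page without executing JavaScript (similar to curl)."""
    request = urllib.request.Request(url, headers={"User-Agent": "readability-check"})
    with urllib.request.urlopen(request, timeout=10) as response:
        charset = response.headers.get_content_charset() or "utf-8"
        return response.read().decode(charset, errors="replace")
```

For example, if `is_readable_without_js(fetch_raw_html("https://www.votresite.com/page"), "a sentence from your article")` returns `False`, the text is probably injected client-side.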

Another point that is often overlooked: AIs don't wait. A long load time, overly complex code, or disorganized HTML can slow down or interrupt the analysis of your page.

This matters all the more because, for its AI Overviews, Google favors content that renders quickly and is clean and well formatted. Gemini weighs technical performance as well as semantic relevance.

Technical objectives to aim for:

Key optimizations:

- Lazy-load non-critical images (`loading="lazy"`).
- Keep the HTML semantic: deeply nested generic markup such as `<section><div><div><p>...` is to be avoided.

AIs better understand well-marked-up pages, where each section has a clear role. Disorganized HTML makes content harder to analyze, especially for AI Overviews, which need to extract a quick, structured, and reliable answer.
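To audit the markup side, here is a rough Python sketch using only the standard library. It measures how deeply `<div>` elements are nested, lists which semantic landmark tags are present, and counts `<img>` tags without `loading="lazy"`. The landmark list and the idea of treating deep `<div>` nesting as a warning sign are assumptions for illustration, not Google thresholds. An image visible above the fold is usually better left without lazy loading, so treat the image count as a prompt to review rather than a rule.

```python
from dataclasses import dataclass, field
from html.parser import HTMLParser

# Tags treated as semantic landmarks (an illustrative selection).
LANDMARKS = {"header", "nav", "main", "article", "section", "aside", "footer"}


@dataclass
class HtmlAudit:
    landmarks: set = field(default_factory=set)
    max_div_depth: int = 0
    images_without_lazy: int = 0


class _AuditParser(HTMLParser):
    def __init__(self):
        super().__init__()
        self.audit = HtmlAudit()
        self._div_depth = 0

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag in LANDMARKS:
            self.audit.landmarks.add(tag)
        elif tag == "div":
            # Track the current <div> nesting level and remember the deepest one.
            self._div_depth += 1
            self.audit.max_div_depth = max(self.audit.max_div_depth, self._div_depth)
        elif tag == "img" and attributes.get("loading") != "lazy":
            self.audit.images_without_lazy += 1

    def handle_endtag(self, tag):
        if tag == "div" and self._div_depth:
            self._div_depth -= 1


def audit_html(html: str) -> HtmlAudit:
    """Parse the HTML once and return the collected structural signals."""
    parser = _AuditParser()
    parser.feed(html)
    parser.close()
    return parser.audit
```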

Once your content is accessible, it must be organized so that language models can understand, extract, and potentially rephrase it. Unlike a human reader, who scans a page visually, an LLM isolates semantic blocks in order to interpret them. It splits, rewrites, and synthesizes.

In other words, good GEO content is not only readable. It is rephrasable.

Generative models look for quick, explicit answers. When a user queries an LLM or triggers an AI Overview in Google, the algorithm aims to provide a clear, concise and, ideally, immediate answer.

This means your content should start with the answer, not with an introduction.

Example:

❌ “SEO has undergone many changes over the years...”

✅ “Generative Engine Optimization (GEO) consists of structuring content so that it can be understood and cited by an AI like ChatGPT or Gemini.”

This so-called “inverted funnel” structure (from the most useful to the most detailed) lets the AI extract the essentials directly, while leaving room to develop the details further down.

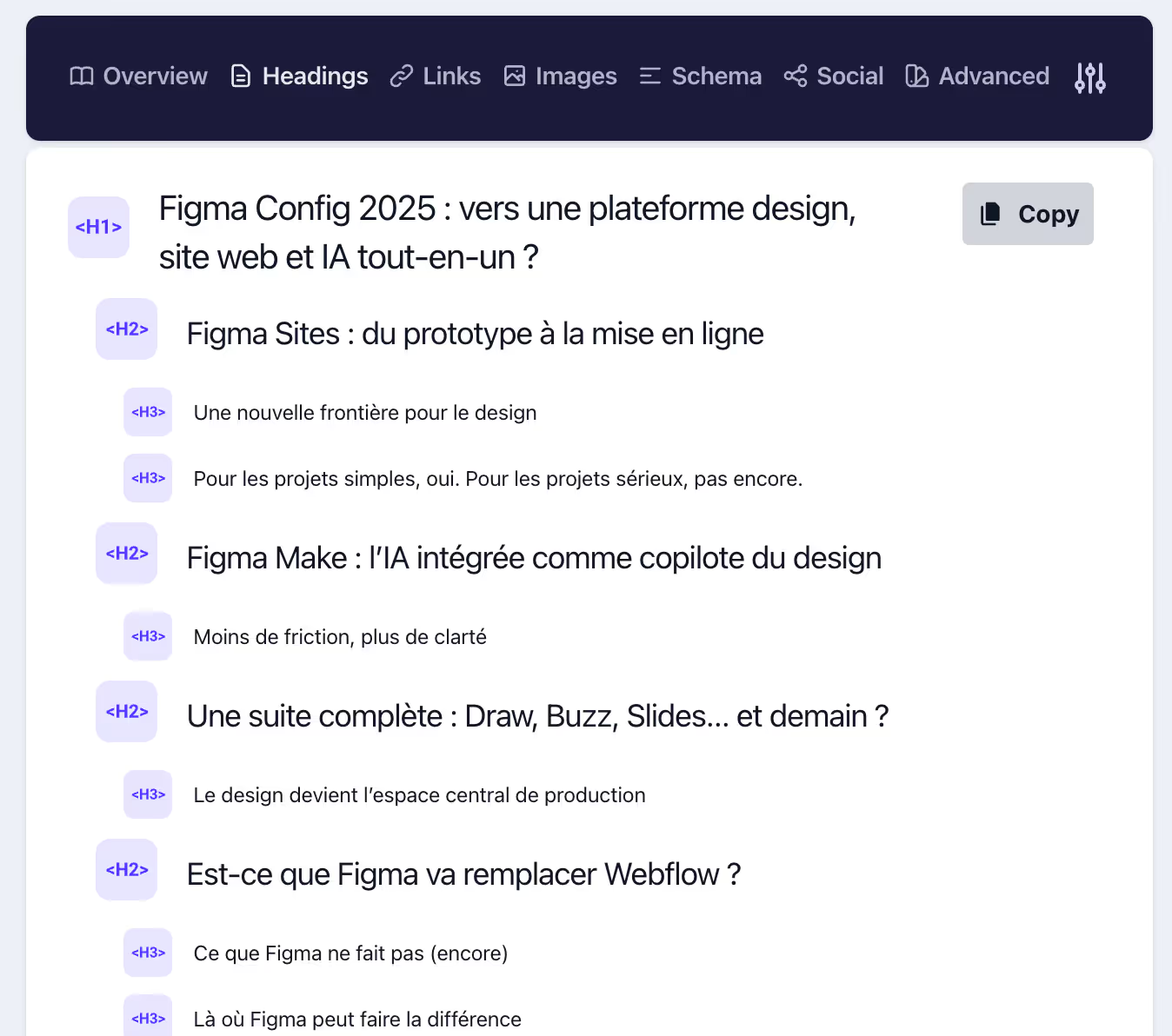

AIs rely heavily on heading tags (`<h1>`, `<h2>`, `<h3>`) to understand how content is organized.

Best practices:

This creates a clear mind map that the AI can follow to locate the key passages to rephrase.
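One quick way to check the hierarchy is to extract the heading outline and look for gaps. The Python sketch below applies a common rule of thumb: one `<h1>` per page, and no skipped levels such as an `<h2>` followed directly by an `<h4>`. That rule is an assumption, not an official requirement, and the function names are illustrative.

```python
from html.parser import HTMLParser


class _HeadingParser(HTMLParser):
    """Records (level, text) for every <h1> to <h6> heading in document order."""

    LEVELS = {"h1": 1, "h2": 2, "h3": 3, "h4": 4, "h5": 5, "h6": 6}

    def __init__(self):
        super().__init__()
        self.outline = []
        self._level = None  # level of the heading currently being read
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag in self.LEVELS:
            self._level = self.LEVELS[tag]
            self._text = []

    def handle_data(self, data):
        if self._level is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag in self.LEVELS and self._level is not None:
            text = " ".join("".join(self._text).split())
            self.outline.append((self._level, text))
            self._level = None


def heading_problems(html: str) -> list:
    """Return readable issues: wrong <h1> count or skipped heading levels."""
    parser = _HeadingParser()
    parser.feed(html)
    parser.close()
    problems = []
    h1_count = sum(1 for level, _ in parser.outline if level == 1)
    if h1_count != 1:
        problems.append(f"expected exactly one <h1>, found {h1_count}")
    previous = 0
    for level, text in parser.outline:
        if level > previous + 1:  # e.g. <h2> followed directly by <h4>
            problems.append(f"skipped level before <h{level}> '{text}'")
        previous = level
    return problems
```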

AIs don't read style, they read structure. A paragraph that is too long, an idea drowned in verbiage, or a vague formulation hinder extraction.
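Paragraph and sentence length can be screened automatically before an editor reviews the text. This Python sketch flags passages that exceed word-count limits. The limits (80 words per paragraph, 25 per sentence) are hypothetical defaults that you should adjust, and the sentence splitter is deliberately naive.

```python
import re

# Hypothetical thresholds: tune them to your own editorial guidelines.
MAX_WORDS_PER_PARAGRAPH = 80
MAX_WORDS_PER_SENTENCE = 25

# Naive sentence boundary: ., ! or ? followed by whitespace.
SENTENCE_END = re.compile(r"(?<=[.!?])\s+")


def long_passages(text: str,
                  max_paragraph: int = MAX_WORDS_PER_PARAGRAPH,
                  max_sentence: int = MAX_WORDS_PER_SENTENCE) -> list:
    """Return (kind, paragraph_index, word_count) for passages over the limits.

    Paragraphs are separated by blank lines.
    """
    flagged = []
    paragraphs = [p for p in re.split(r"\n\s*\n", text) if p.strip()]
    for index, paragraph in enumerate(paragraphs):
        words = len(paragraph.split())
        if words > max_paragraph:
            flagged.append(("paragraph", index, words))
        for sentence in SENTENCE_END.split(paragraph.strip()):
            sentence_words = len(sentence.split())
            if sentence_words > max_sentence:
                flagged.append(("sentence", index, sentence_words))
    return flagged
```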

Editorial objectives:

Avoid:

AIs make better use of some content structures because those structures make rephrasing or summarizing easier. Integrate them naturally into your articles.

These formats have a double benefit: they improve the reading experience and increase the likelihood of being included in generated responses.

LLM-ready content is not just a matter of substance. It should also contain segments that an AI assistant can copy and paste directly into its response.

✅ Example of a sentence that can be used by an AI:

“Google AI Overviews were triggered for over 13% of queries in the United States in early 2025.”

❌ Useless sentence for an LLM:

“This point will be covered in more detail later in the article.”

In practice, this means regularly integrating:

On a web saturated with similar content, LLMs try to separate signal from noise. Their aim is not only to summarize a well-structured page but to identify the sources that genuinely add something new or reliable.

Content that simply repeats what can already be found elsewhere, even when well presented, is very unlikely to be picked up by ChatGPT, Gemini, or an AI Overview.

On the other hand, original information, specific statistics or precise feedback can become anchors for a generated response. This is where editorial strategy comes into its own.

Language models favor content that demonstrates real expertise, because that is the content that enriches their ability to answer.

This does not mean producing “complex” content, but content rooted in the reality of your business, which AI won't be able to find elsewhere.

Concrete examples:

These elements have two benefits:

AIs act as trusted intermediaries: they need to know that what they reuse is based on verifiable data.

So you need to do something few SEO writers do today: cite your sources properly.

Best practices to follow:

✅ Example of a well-written quote:

“At the beginning of 2025, AI Overviews were displayed for around 13% of queries in the United States, based on the latest available data.”

❌ To avoid:

“Numerous studies show that...” or “According to some sources...”

This kind of vague wording is ignored or even “penalized” by the most recent models, which emphasize precision and editorial responsibility. In 2024, 72% of the 25 most popular queries on Google included a brand explicitly mentioned by the user (source: Search Engine Land). This suggests that users are looking for identified, contextualized content, and that AIs tend to favor names that are clear, well structured, and easy to cite.
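Vague attributions and forward references like the ones in the examples above can be caught with a simple phrase list. The Python sketch below is a heuristic. The patterns are hypothetical examples that you can extend with your own editorial rules, and a match only means the sentence deserves a second look.

```python
import re

# Hypothetical phrases to flag; extend with your own editorial rules.
VAGUE_PATTERNS = [
    r"\bnumerous studies show\b",
    r"\baccording to some sources\b",
    r"\bexperts agree\b",
    r"\blater in (?:the|this) article\b",
]


def flag_vague_sentences(text: str) -> list:
    """Return sentences containing an unsourced claim or a forward reference."""
    # Naive split on ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    compiled = [re.compile(pattern, re.IGNORECASE) for pattern in VAGUE_PATTERNS]
    return [s for s in sentences if any(c.search(s) for c in compiled)]
```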

Models like Gemini or Claude don't just assess the raw content: they also analyze the editorial context, including the perceived credibility of the author.

On a page optimized for LLMs first, the author should not be just an anonymous field in the metadata. They must appear clearly, along with signals that support their authority.

This strengthens the E-E-A-T (Experience, Expertise, Authoritativeness, Trust) signal, which has become central to Google's algorithm and to how generative AIs score content.
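One way to make the author explicit to machines as well as to readers is schema.org `Article` markup with a `Person` author. The Python sketch below builds that JSON-LD. `headline`, `datePublished`, `dateModified`, `author`, `name`, `jobTitle`, `url`, and `sameAs` are standard schema.org properties. The helper name and all sample values (author, URLs, dates) are hypothetical placeholders.

```python
import json


def article_jsonld(headline, author_name, author_title, author_url, same_as,
                   date_published, date_modified):
    """Build schema.org Article markup with an explicit Person author."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "datePublished": date_published,  # ISO 8601 dates
        "dateModified": date_modified,
        "author": {
            "@type": "Person",
            "name": author_name,
            "jobTitle": author_title,
            "url": author_url,   # author bio page on your own site
            "sameAs": same_as,   # external profiles that confirm the identity
        },
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


# Hypothetical example values.
markup = article_jsonld(
    "How to make your content visible in AI responses",
    "Jane Doe", "SEO consultant",
    "https://www.votresite.com/authors/jane-doe",
    ["https://www.linkedin.com/in/janedoe"],
    "2025-07-01", "2025-07-14",
)
```

The resulting JSON goes inside a `<script type="application/ld+json">` tag in the page's HTML.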

Language models are giving increasing weight to the freshness of information. This is not only because they incorporate recent corpora (notably Gemini, Claude, and GPT-4 Turbo), but also because they try to avoid relaying outdated data.

Content that is explicitly updated will be considered more reliable than content that has remained static for two years, even if it is still valid.

What you need to do:

“Article updated on July 14, 2025”

You can also schedule editorial updates every 6 to 12 months to stay on the AI radar.
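The visible update line and the 6-to-12-month review cycle can be handled by a small helper. This Python sketch is for illustration only. The function names are hypothetical, the label format mirrors the example above, and `max_months` is where you choose a cadence within that range.

```python
from datetime import date


def update_label(updated: date) -> str:
    """Visible freshness line, e.g. 'Article updated on July 14, 2025'."""
    # %B gives the English month name under the default C locale.
    return f"Article updated on {updated:%B} {updated.day}, {updated.year}"


def months_between(start: date, end: date) -> int:
    """Whole calendar months elapsed from start to end."""
    months = (end.year - start.year) * 12 + (end.month - start.month)
    if end.day < start.day:  # the last month is not complete yet
        months -= 1
    return months


def review_due(updated: date, today: date, max_months: int = 12) -> bool:
    """True once the content has reached the chosen review cadence."""
    return months_between(updated, today) >= max_months
```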

AI Overviews change the way results are displayed: the user gets an AI-generated summary (powered by Gemini) that answers their question directly, without their necessarily having to click.

According to BrightEdge, as of May 2025 more than 11% of queries trigger an AI Overview, a 22% increase in one year. The signal is clear: these displays are being rolled out at scale, and they are becoming shorter and more precise.

Google builds this response in four steps:

A page ranking 8th or 10th can still be included if it is structured, clear, and verifiable.

If you work in local or B2B SEO, also take care of your Google Business Profile listing, your reviews, and your location data: AI Overviews frequently draw on them for local answers.

According to Grand View Research forecasts, the global LLM market is expected to grow by 36% by 2030, with growth expected to accelerate in the health, finance, and B2B content sectors (source: Grand View Research).

SEO is no longer just about position. With the rise of LLMs like ChatGPT, Gemini, or Claude and the large-scale integration of AI Overviews into Google, the ability to be understood, rephrased, and cited by an AI is becoming a decisive factor for visibility.

This requires a change in mindset: writing to be found is no longer enough; you have to write to be used by AI. This is what Generative Engine Optimization (GEO) is all about.

We incorporate this new requirement from the design stage:

Do you need expert support to adapt your SEO strategy to AI? Contact us here →

An LLM (Large Language Model) is an artificial intelligence capable of understanding and generating natural language. It does not simply index the web like a traditional search engine: it rephrases content to produce complete answers. This changes the logic of SEO: you now have to write so that AIs can extract and reuse your content.

Traditional SEO aims to rank well in the SERP. GEO (Generative Engine Optimization) aims to appear in AI-generated answers. The first works on indexing and ranking; the second works on comprehension, rephrasing, and citation by an LLM.

It is still hard to track precisely, but some signs can alert you:

No, but pages with high informational value (articles, guides, industry pages, resources) should be designed to be rephrased and extracted. Transactional or legal pages remain outside this logic.

Yes. The FAQPage, Article, HowTo, and Review schema types are particularly useful. They make it easier for AI models to analyze your content, especially when Gemini automatically rephrases or partially cites it. You can test and validate this markup with Google's Rich Results Test.
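For an FAQ like this one, the markup can be generated from question-and-answer pairs. The Python sketch below builds schema.org `FAQPage` JSON-LD. `mainEntity`, `Question`, `acceptedAnswer`, and `Answer` are standard schema.org names, while the helper name and the sample question are hypothetical. Since 2023, Google has shown FAQ rich results only for a limited set of authoritative sites, so the markup may validate without producing a visible rich result.

```python
import json


def faq_page_jsonld(pairs):
    """Build schema.org FAQPage markup from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, ensure_ascii=False, indent=2)


# Hypothetical question; the answer reuses the SEO vs GEO explanation above.
faq_markup = faq_page_jsonld([
    ("What is the difference between SEO and GEO?",
     "Traditional SEO aims to rank well in the SERP. GEO aims to appear in AI-generated answers."),
])
```

Embed the output in a `<script type="application/ld+json">` tag, then check it with the Rich Results Test.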